To make such data scientifically usable, whether for training AI models or for statistical analyses, it must be annotated: enriched with additional information, starting with the identification of the species.

This was the focus of last week's EcoinfoFAIR conference, an annual event that brings together experts in informatics applied to ecology. For three days, participants gathered at the CNRS Station for Theoretical and Experimental Ecology in Moulis.

The event aimed to collectively envision what an ideal, sustainable solution for annotating images and sound recordings of species might look like in the future.

It provided an opportunity to review around fifteen existing French solutions and projects in this field, including DeepFaune, ecoSound-web, Ocean-Spy, SpiPoll, and PlantNet.

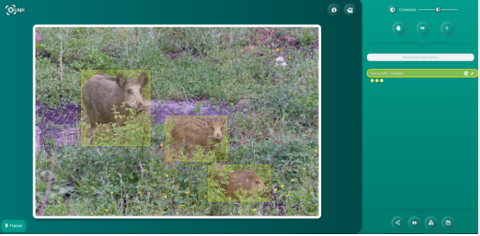

Our colleagues Julien Ricard and Guillaume Debat presented Ocapi, our AI-assisted web platform for annotating camera trap data.

And since we were in the area, we took the opportunity to retrieve acoustic data recorded in the field by audio sensors deployed as part of the Psi-Biom research project.